Computer audio is something I admittedly take for granted. Whether I am watching YouTube videos, listening to Spotify or playing video games, audio remains a central part of my computer experience. While writing this tutorial, I hope to gain a better understanding of how audio files are stored, loaded and played. As I begin, I have a basic grasp of the fundamentals of audio processing, but I hope to learn some new concepts alongside you. Given my background in Maths, I expect this tutorial to get pretty mathsy, but don’t be discouraged by that! First and foremost, I will try to make things understandable. I also find concepts easier to grasp when I know the background, so the tutorial will begin very physicsy.

The Plan

I hope to break the topic down into bite-sized sections covering the following:

- Why Does Any of This Matter?

- Fundamentals of Sound

- Digital Sound

- Applications

In Part 1 of the tutorial, I will cover the first two points, with the bulk being about the fundamentals of sound. If you already know how sound works or want to jump straight to computer audio, Part 2 will be available soon.

Why Does Any of This Matter?

I am sure this question will mean something different to each of you. For game designers, computer audio provides the tools to make digital worlds more immersive. For website designers, it provides the tools to alert users to new posts and messages. On the surface, both of these are possible without thinking about sampling rates and the Nyquist frequency. But for those digging deeper into how things work, these concepts become crucial. Maybe you are a developer for a game engine, web browser or operating system; each of these requires careful consideration of computer audio.

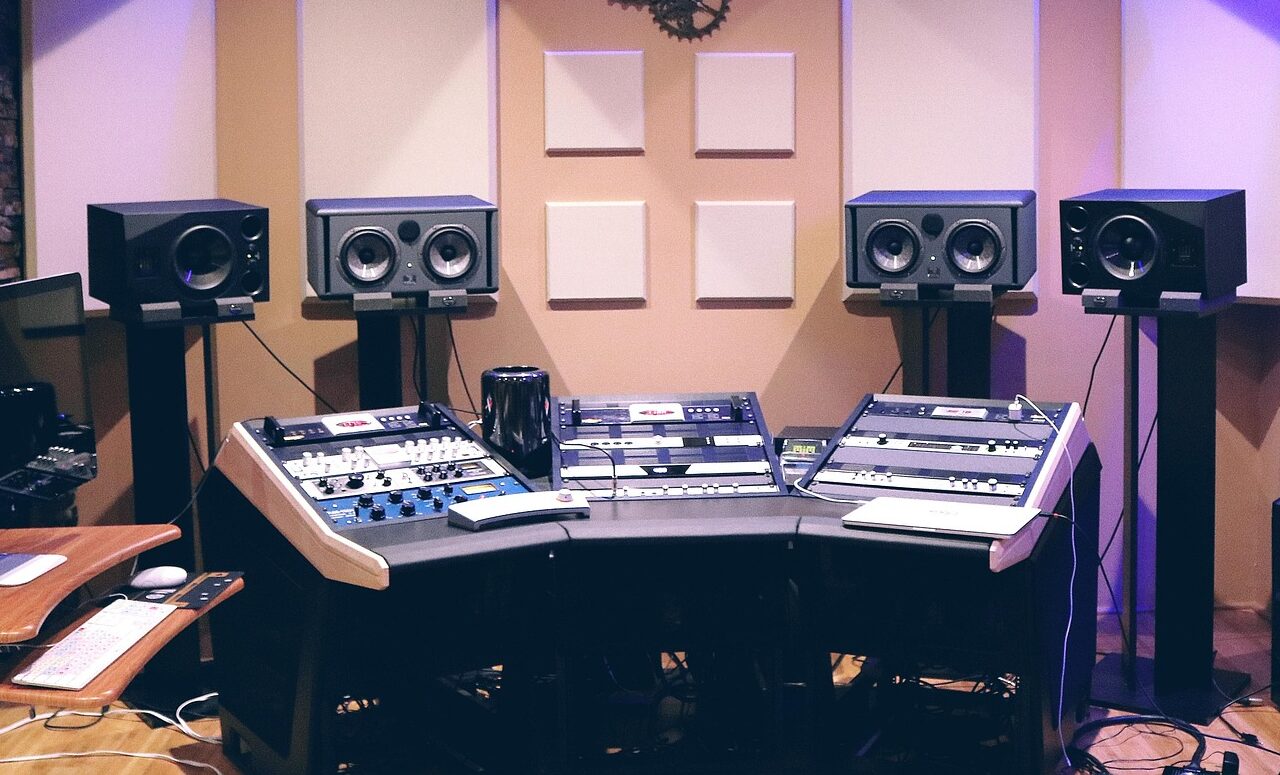

My personal interest in computer audio began in my early teens, when I started experimenting with music production and sound synthesis. Having developed a fascination with the analog and digital synthesizers of the 80s and 90s, I began teaching myself the fundamentals of sound synthesis. This continued as I began exploring VST (Virtual Studio Technology) instruments and looking into how to develop my own.

More recently, I have become interested in computer audio from an HCI (Human Computer Interaction) perspective. I want to understand more about how users interact with digital audio and how the features and characteristics of digital sound files can be communicated effectively. This encompasses everything from sound wave visualisation to isolating individual features of timbre, such as resonance and harmonics. To understand these interactions, we first need to review the fundamentals of sound, from waves to frequencies.

Fundamentals of Sound

Sounds are made up of combinations of mechanical waves travelling through a medium such as air. They are produced when a physical object vibrates and causes the air pressure around it to fluctuate.

Consider striking a thin metal plate with a hammer. When the hammer hits the plate, some of its kinetic energy is transferred into the material. This energy causes the plate to move back and forth around its resting position. The vibration is strongest close to the impact and weaker further away. In some materials, such as rubber and wood, more of the energy is converted to heat, which leads to less vibration and therefore less sound being produced.

As the plate vibrates, it interacts with the air next to its surface. When the plate moves outward, it pushes against nearby air molecules, increasing the local air pressure. When it moves inward, it pulls away from the air, reducing the local air pressure. This alternating pattern of compression (high pressure) and rarefaction (low pressure) repeats as the plate continues to vibrate. The regions of compression and rarefaction propagate outwards because each moving layer of air pushes and pulls on the layer next to it.

Now, consider a single air molecule a short distance away from the plate. It doesn’t travel along with the sound; instead, it oscillates back and forth as the regions of high and low pressure pass by. Each molecule pushes on its neighbours, passing the energy on through the air. In this way, each individual molecule only moves a short distance, but the wave propagates across large distances.

This pattern of high and low pressure is called a sound wave. The strength of the pressure changes decreases with distance because the energy spreads out in all three dimensions, over an ever-larger sphere around the source. This results in a lower-amplitude sound wave, or in other words, a lower volume at greater distances.
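To put a number on "spreads out in all three dimensions", here is a small sketch assuming an idealised point source in open air, with no reflections and no absorption (real rooms are messier). The source's power is shared over the surface of a sphere of area 4πr², so intensity falls with 1/r² while the pressure amplitude falls with 1/r. The function names and the 1 W source power are made up for illustration.

```python
import math

def intensity_at(power_watts: float, distance_m: float) -> float:
    """Intensity (W/m^2) of an idealised point source radiating equally in all directions."""
    # The power is spread over the surface of a sphere of radius r: 4 * pi * r^2
    return power_watts / (4 * math.pi * distance_m ** 2)

def relative_amplitude(distance_m: float, reference_m: float = 1.0) -> float:
    """Pressure amplitude relative to the amplitude at reference_m (amplitude ~ 1/r)."""
    return reference_m / distance_m

# Compare each distance against the values at 1 m from a hypothetical 1 W source.
for r in (1.0, 2.0, 4.0, 8.0):
    ratio = intensity_at(1.0, r) / intensity_at(1.0, 1.0)
    print(f"{r:.0f} m: intensity x{ratio:.4f}, amplitude x{relative_amplitude(r):.3f}")
```

Every doubling of distance quarters the intensity but only halves the amplitude, which is why sound fades gradually rather than suddenly as you walk away.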

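We can model this movement with a sine wave. In its simplest form this is f(x) = sin(x); more generally, d(t) = A·sin(2πft), where d(t) is the displacement of a single air particle from its equilibrium position at time t, A is the amplitude and f is the frequency (the number of full oscillations per second). This equation approximates how the particle oscillates back and forth around its equilibrium position.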
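To make the sine model concrete, the sketch below adds an amplitude A and a frequency f to it, so the displacement at time t is A·sin(2πft). It samples one full cycle of a 440 Hz tone (an arbitrary choice) at eight evenly spaced moments. The helper names are hypothetical, and the model ignores everything except the idealised back-and-forth motion of one particle.

```python
import math

def displacement(t: float, amplitude: float, frequency: float) -> float:
    """Displacement of an idealised air particle at time t (seconds): A * sin(2*pi*f*t)."""
    return amplitude * math.sin(2 * math.pi * frequency * t)

def sample_wave(amplitude: float, frequency: float, duration: float, steps: int) -> list[float]:
    """Evaluate the displacement at `steps` evenly spaced times across `duration` seconds."""
    dt = duration / steps
    return [displacement(i * dt, amplitude, frequency) for i in range(steps)]

# One full cycle of a 440 Hz tone lasts 1/440 s; sample it at 8 evenly spaced points.
samples = sample_wave(amplitude=1.0, frequency=440.0, duration=1 / 440, steps=8)
print([round(s, 3) for s in samples])
```

The particle starts at rest position, swings out to +A a quarter of the way through the cycle, passes back through equilibrium halfway, and reaches -A at three quarters.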

Amplitude and Energy

In physics, energy can never be created or destroyed, only converted between different forms. This is why the energy of the hammer strike doesn’t simply disappear but is converted into the kinetic energy of the vibrating plate and into heat. The total energy corresponds to the amount of ‘work’ that something can do. In the context of soundwaves, the energy corresponds to how much air the material can push. The more energy a soundwave has, the more movement occurs in the air it passes through.

The amplitude of a soundwave corresponds to how far the air moves within a pressure wave. When a material oscillates further from its resting position, more air is pushed and pulled as a result, so a larger amplitude gives the wave more energy. Furthermore, if the frequency stays the same and the amplitude is doubled, the air needs to move twice as far in the same amount of time, which means it must move twice as fast. Because kinetic energy grows with the square of speed, the energy of the wave is proportional to the square of its amplitude: doubling the amplitude quadruples the energy. The most important thing to remember is that the energy of a soundwave increases rapidly as its amplitude increases.
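One way to check the "more than linearly" claim is to treat a small parcel of air as a simple harmonic oscillator, a simplification on my part rather than a full acoustic model. Its total energy is E = ½·m·(2πf)²·A², so energy scales with the square of the amplitude (and of the frequency). The mass, amplitude and frequency values below are arbitrary illustrative numbers.

```python
import math

def oscillator_energy(mass_kg: float, amplitude_m: float, frequency_hz: float) -> float:
    """Total energy of a simple harmonic oscillator: E = 1/2 * m * (2*pi*f)^2 * A^2."""
    omega = 2 * math.pi * frequency_hz  # angular frequency in rad/s
    return 0.5 * mass_kg * omega ** 2 * amplitude_m ** 2

# Same (hypothetical) parcel of air and frequency, amplitude doubled.
base = oscillator_energy(mass_kg=1e-3, amplitude_m=1e-6, frequency_hz=440)
doubled = oscillator_energy(mass_kg=1e-3, amplitude_m=2e-6, frequency_hz=440)
print(f"Energy ratio when amplitude doubles: {doubled / base:.1f}")  # prints 4.0
```

Because the actual values cancel out in the ratio, any mass and frequency would give the same factor of four.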

Frequency and Wavelength

The frequency of a wave is the number of full oscillations completed per second, measured in hertz (Hz). Technically, if you held the amplitude constant and increased the frequency, the energy of the wave would also increase, since the individual particles must oscillate faster to complete each full cycle in time. In reality, however, a source only has a limited amount of energy to give. For the energy to be conserved, an increase in frequency leads to a reduction in amplitude, and a decrease in frequency leads to an increase in amplitude. In the context of human hearing, the ear is much more sensitive to mid and high frequencies (peaking at around a few kilohertz) than to low ones, so high-frequency sounds can be heard clearly at significantly lower amplitudes.
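Using the same simple harmonic oscillator simplification, E = ½·m·(2πf)²·A², we can rearrange for the amplitude a fixed energy budget allows at each frequency: A = √(2E/m) / (2πf). The sketch below uses an arbitrary energy and mass to show the trade-off; it is an idealisation, not a model of any real instrument or speaker.

```python
import math

def amplitude_for_energy(energy_j: float, mass_kg: float, frequency_hz: float) -> float:
    """Amplitude a simple harmonic oscillator needs to hold `energy_j` at a given frequency.

    Rearranges E = 1/2 * m * (2*pi*f)^2 * A^2 into A = sqrt(2E / m) / (2*pi*f).
    """
    omega = 2 * math.pi * frequency_hz
    return math.sqrt(2 * energy_j / mass_kg) / omega

# Same (hypothetical) energy budget spread across different frequencies.
for f in (110, 220, 440, 880):
    a = amplitude_for_energy(energy_j=1e-9, mass_kg=1e-3, frequency_hz=f)
    print(f"{f:>4} Hz -> amplitude {a:.3e} m")
```

Each time the frequency doubles (one octave up), the amplitude the same energy can sustain halves.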

While the speed of the air particles within each oscillation can vary significantly with amplitude and frequency, the wave itself travels at a roughly constant speed in air. No matter how quickly the air oscillates back and forth, the speed of sound in air is roughly 343 m/s (at about 20 °C). This speed is a measure of how quickly the sound propagates through the air.

The wavelength of a sound is the physical distance between the start of one cycle and the start of the next, i.e. the distance the wave travels during a single oscillation. Since all frequencies travel at the same speed, wavelength = speed of sound ÷ frequency. In a lower-frequency soundwave, the sound travels at the same speed but each oscillation takes longer, so each cycle is spread over much more distance than in a high-frequency wave.
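The relationship between speed, frequency and wavelength is λ = v / f. A quick sketch using 343 m/s for the speed of sound in air at around 20 °C shows how wide the range is across human hearing:

```python
SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C

def wavelength(frequency_hz: float, speed: float = SPEED_OF_SOUND) -> float:
    """Wavelength in metres: lambda = v / f."""
    return speed / frequency_hz

for f in (20, 100, 1_000, 20_000):
    print(f"{f:>6} Hz -> {wavelength(f):.4f} m")
```

A 20 Hz bass note has a wavelength of about 17 m, longer than most rooms, while a 20 kHz tone has a wavelength of under 2 cm.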

Wavelength is important because, to create a sound, the source must be able to push the air coherently over a region comparable to the wavelength. If the source is too small compared to the wavelength, a strong pressure wave won’t form, because the air simply flows around the edges of the source (such as a speaker cone) instead of being compressed. As a result, low frequencies need large sources, and these large sources involve large air displacement, which requires a lot of energy.
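A common rule of thumb from loudspeaker design compares the source radius a to the wavelength through the quantity ka, where k = 2π/λ. Roughly speaking, a circular source radiates efficiently when ka is at least about 1 and becomes increasingly inefficient below that. The sketch below treats that threshold as an assumption, and the speaker radii are hypothetical round numbers, not measurements of any real product.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C

def ka(frequency_hz: float, radius_m: float) -> float:
    """Wavenumber k = 2*pi/lambda multiplied by the source radius a (dimensionless)."""
    wavelength = SPEED_OF_SOUND / frequency_hz
    return 2 * math.pi / wavelength * radius_m

def efficient_above(radius_m: float) -> float:
    """Frequency at which ka = 1, used here as a rough boundary for efficient radiation."""
    return SPEED_OF_SOUND / (2 * math.pi * radius_m)

# Hypothetical source sizes (radius in metres).
for name, radius in (("phone speaker", 0.005), ("bookshelf woofer", 0.065), ("large subwoofer", 0.19)):
    print(f"{name}: ka = 1 at about {efficient_above(radius):.0f} Hz")
```

Below its threshold, a source has to make up for its size with much larger movements, which is the "large air displacement" described above.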

Absorption, Transmission and Reflection

As soundwaves propagate outwards from their source, they inevitably come into contact with other materials. Depending upon the type of material and the frequency, the proportion of sound energy that is absorbed, transmitted or reflected will vary.

High Frequency Waves

When a high-frequency soundwave hits a rigid material, like concrete or brick, the air molecules push against the surface. The high stiffness and mass of the material prevent it from moving much, so most of the sound energy is reflected back into the room. The material does still move slightly, which causes it to vibrate. The vibration can pass through the material, making the air on the other side vibrate as well. Consider singing karaoke in a room with a concrete wall: your neighbours might still hear you!

When a high frequency sound hits a soft or porous material, like curtains or a carpet, the air is forced to pass through complex narrow gaps between the fibres in the material. Friction between the air and the fibres converts the sound energy into heat, which absorbs most of the wave. Only a small fraction is transmitted or reflected.

In reality, all materials exhibit some combination of absorption, transmission and reflection. Concrete reflects nearly 100% of incoming sound, whereas wood might reflect around 80%, absorb 15% and transmit 5% on average. Because so little sound passes through it, concrete is often used to construct walls in apartment buildings. One caveat is that such rooms can still sound echoey unless soft materials, such as carpets, are also used to absorb reflections.
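Since energy is conserved, the reflected, absorbed and transmitted fractions must add up to 1. The sketch below checks that and estimates how much energy is left after a sound bounces off the same surface repeatedly, which hints at why concrete rooms sound echoey. It assumes every bounce hits the same material and ignores losses in the air. The wood split uses the example figures above; the exact concrete split is a hypothetical stand-in for "nearly 100%".

```python
def check_balance(reflected: float, absorbed: float, transmitted: float) -> None:
    """Raise if the fractions of incident energy do not account for all of it."""
    total = reflected + absorbed + transmitted
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"fractions sum to {total}, not 1")

def energy_after_reflections(reflected: float, bounces: int) -> float:
    """Fraction of the original energy left after `bounces` reflections off the same material."""
    return reflected ** bounces

# Illustrative (reflected, absorbed, transmitted) fractions, not measured data.
materials = {
    "concrete": (0.99, 0.005, 0.005),  # hypothetical split for "nearly 100% reflected"
    "wood": (0.80, 0.15, 0.05),
}
for name, (r, a, t) in materials.items():
    check_balance(r, a, t)
    print(f"{name}: {energy_after_reflections(r, 10):.1%} of the energy left after 10 reflections")
```

After ten bounces the concrete room still holds most of the original energy, while the wood has soaked up nearly nine tenths of it.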

Low Frequency Waves

Low-frequency waves have longer wavelengths and interact with materials on a much larger scale than high-frequency sounds. Soft materials, like curtains, move in response to these waves but are too light and flexible to absorb significant energy, so the waves mostly pass through without being dissipated.

Rigid materials, such as floors and walls, are pushed and pulled by the large volumes of air. This causes the structures themselves to vibrate, transmitting energy to the other side and reflecting less efficiently than at high frequencies.

Low frequencies are generally very hard to absorb from the air. To reduce them, thick and dense materials can be used to prevent transmission and limit vibrations.

Hearing Sound

The previous sections are relevant not only to how sound travels through things like floors and walls but also to how humans perceive sound. The human ear is essentially a pressure sensor which can detect changes in air pressure over a range of amplitudes and frequencies (roughly 20 Hz to 20 kHz).

The loudness we perceive corresponds to the amplitude of the sound wave: the bigger the amplitude, the louder the sound. The pitch we hear corresponds to the frequency of the wave; higher frequencies are heard as higher tones, while lower frequencies are heard as bass notes. The timbre of a sound relates to its character, such as whether it sounds soft, bright or hollow. I hope to explore this more in a future tutorial.
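As a small taste of timbre, here is a sketch of one common way to think about it: combining sine waves at whole-number multiples (harmonics) of a fundamental frequency. Both tones below repeat every 1/220 s, so they share the same pitch, but their shapes differ, which we hear as a different character. The harmonic amplitudes are a hypothetical mix, loosely following the 1/n pattern of a sawtooth wave, and the helper names are my own.

```python
import math

def tone(t: float, fundamental_hz: float, harmonic_amplitudes: list[float]) -> float:
    """Sum of sine waves at whole-number multiples of the fundamental frequency."""
    return sum(
        amp * math.sin(2 * math.pi * fundamental_hz * n * t)
        for n, amp in enumerate(harmonic_amplitudes, start=1)  # n = 1 is the fundamental
    )

def sample_period(fundamental_hz: float, harmonic_amplitudes: list[float], steps: int = 8) -> list[float]:
    """Sample one full period (1 / fundamental) of the tone at evenly spaced times."""
    period = 1 / fundamental_hz
    return [tone(i * period / steps, fundamental_hz, harmonic_amplitudes) for i in range(steps)]

PURE = [1.0]                       # a single sine wave
BRIGHTER = [1.0, 0.5, 0.33, 0.25]  # hypothetical mix, roughly the 1/n pattern of a sawtooth

print("pure:    ", [round(x, 2) for x in sample_period(220.0, PURE)])
print("brighter:", [round(x, 2) for x in sample_period(220.0, BRIGHTER)])
```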

Our ears are very good at differentiating between subtle differences in sound waves. The next part of this tutorial will begin to explore these subtle differences by using computers to visualise the sound waves.

You can find part two of this tutorial here.

Until next time,

Emily
